Re-engineering the Trust Stack for Agentic Finance

Every major evolution in finance has introduced not only new capabilities, but also new risks. The introduction of digital money required cybersecurity. The rise of online banking demanded encryption and identity verification. Now, as AI agents step into the financial arena—initiating transactions, negotiating prices, managing assets—the risk landscape shifts once again.

This time, the threat surface is not a compromised password or a stolen card number. It’s the possibility that your agent itself—the code acting on your behalf—might act beyond intent, get exploited, or evolve in ways you didn’t authorize. For the first time in history, trust in finance must extend to autonomous software.

A New Risk Landscape

Google's Agent Payments Protocol (AP2) recognizes what the industry is only beginning to grasp: introducing agents into payments changes the structure of financial trust itself. Delegated, asynchronous, and context-aware transactions break core assumptions built into traditional payment rails, starting with the assumption that a present user authorizes each transaction directly.

- User asynchronicity: The user may not be present when the transaction occurs. Trust now depends on verifiable mandates, not button clicks.

- Delegated authority: An agent acts on behalf of a human—yet the human may be offline, asleep, or half a world away.

- Indirect trust chains: Credential providers, merchants, and networks must rely on each other through agent-mediated exchanges, rather than direct relationships.

- Agent identity: A new layer of authentication emerges—one that verifies not the human, but the model, its version, and its runtime integrity.

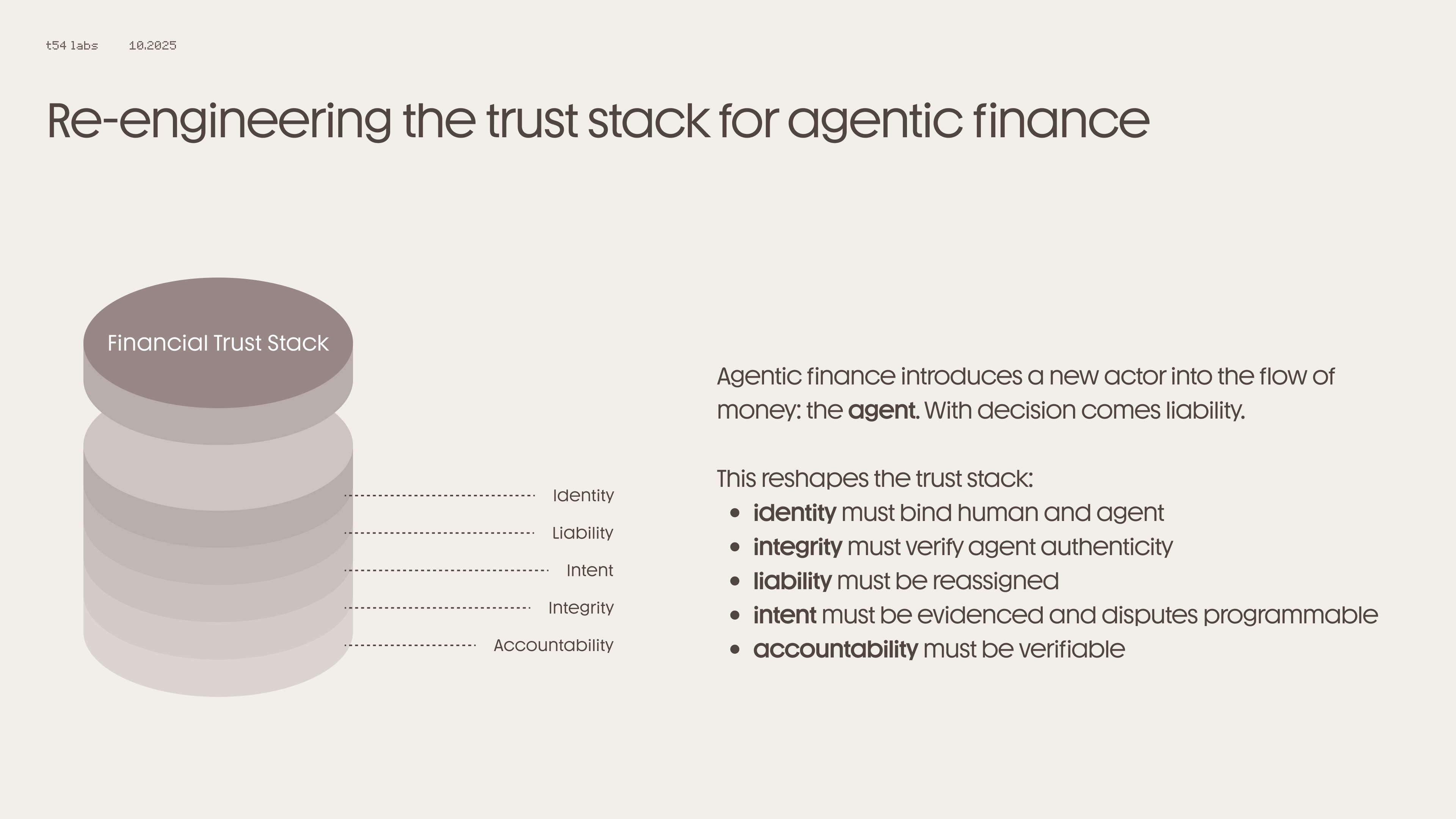

To bridge the gap between how agents transact and what traditional rails assume, the trust stack itself must evolve.

The Emerging Gaps

1. Human–Agent Identity Binding

Today’s compliance frameworks verify faces, IDs, and documents. But they don’t verify the code acting on behalf of a human. As AI agents gain authority to transact, identity must extend beyond biometrics and signatures—into model provenance, version control, and runtime verification. Without this binding, authorization becomes meaningless.
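One way to picture this binding is a signed record that ties a verified user to a specific model name, version, and artifact digest. The sketch below is a minimal illustration under stated assumptions: `bind_agent`, `verify_binding`, and `PROVIDER_KEY` are hypothetical names, and an HMAC over a shared key stands in for the public-key signature a real identity provider would issue.

```python
import hashlib
import hmac
import json

# HYPOTHETICAL shared key held by an identity provider. A production system
# would use asymmetric signatures so verifiers never hold the signing key.
PROVIDER_KEY = b"demo-identity-provider-key"


def _canonical(record: dict) -> bytes:
    # Sorted keys and fixed separators give a stable byte encoding to sign.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode()


def bind_agent(user_id: str, model_name: str, model_version: str,
               artifact: bytes, key: bytes = PROVIDER_KEY) -> dict:
    """Bind a verified human to one specific model build."""
    record = {
        "user_id": user_id,
        "model_name": model_name,
        "model_version": model_version,
        # Provenance: a digest of the exact model artifact that was approved.
        "artifact_sha256": hashlib.sha256(artifact).hexdigest(),
    }
    tag = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return {**record, "signature": tag}


def verify_binding(binding: dict, key: bytes = PROVIDER_KEY) -> bool:
    """Check that the binding record has not been altered since it was signed."""
    record = {k: v for k, v in binding.items() if k != "signature"}
    expected = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, binding.get("signature", ""))


if __name__ == "__main__":
    b = bind_agent("user-123", "shopping-agent", "1.4.0", b"model weights v1.4.0")
    print(verify_binding(b))                               # True
    print(verify_binding({**b, "model_version": "1.5.0"}))  # False
```

Because the artifact digest is inside the signed record, swapping in a different model build, or merely relabeling its version, invalidates the binding.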

2. Intent and Delegation Proof

In a delegated world, “Did the user mean to do this?” becomes a cryptographic question, not a support ticket. Every autonomous action—from a payment to a portfolio trade—needs verifiable intent proofs tied to merchant, asset, and time. Logs or screenshots aren’t enough; intent must be evidenced and traceable through signed mandates.
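A signed mandate can be checked mechanically before an agent acts. This minimal sketch assumes a mandate scoped to one merchant, one asset, a spending cap, and an expiry time; the `Mandate` fields, `sign_mandate`, and `authorize` are hypothetical illustrations, not the AP2 mandate format, and the HMAC shared key stands in for a user-held private key.

```python
import hashlib
import hmac
import json
import time
from dataclasses import asdict, dataclass
from typing import Optional, Tuple

# HYPOTHETICAL key standing in for the user's signing credential; real mandates
# would be signed with a key only the user controls.
USER_KEY = b"demo-user-key"


@dataclass(frozen=True)
class Mandate:
    user_id: str
    merchant: str
    asset: str
    max_amount_cents: int
    expires_at: float  # Unix timestamp


def _payload(m: Mandate) -> bytes:
    return json.dumps(asdict(m), sort_keys=True, separators=(",", ":")).encode()


def sign_mandate(m: Mandate, key: bytes = USER_KEY) -> str:
    return hmac.new(key, _payload(m), hashlib.sha256).hexdigest()


def authorize(m: Mandate, signature: str, merchant: str, asset: str,
              amount_cents: int, now: Optional[float] = None,
              key: bytes = USER_KEY) -> Tuple[bool, str]:
    """Return (allowed, reason) for an agent action against a signed mandate."""
    now = time.time() if now is None else now
    if not hmac.compare_digest(sign_mandate(m, key), signature):
        return False, "invalid signature"
    if merchant != m.merchant:
        return False, "merchant not covered by mandate"
    if asset != m.asset:
        return False, "asset not covered by mandate"
    if amount_cents > m.max_amount_cents:
        return False, "amount exceeds mandate limit"
    if now >= m.expires_at:
        return False, "mandate expired"
    return True, "within mandate"
```

Returning a reason alongside the decision means every refusal is itself evidence that can be logged and traced back to a specific clause of the mandate.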

3. Integrity and Runtime Drift

Unlike static software, agents change. They retrain, update, and adapt. An approved model in March may behave differently by June. Without continuous runtime attestation—verifying that the executing agent is the same trusted identity originally approved—integrity fades quietly, and risk compounds invisibly.
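The comparison at the heart of runtime attestation can be sketched as re-measuring the running agent and checking the result against the digest recorded at approval. This is a simplified, hypothetical illustration (`approve`, `attest`, and `execute_payment` are invented names): real attestation relies on hardware-backed measurement, such as a TPM or a trusted execution environment, rather than a process hashing its own files.

```python
import hashlib
import hmac
from typing import Dict

# HYPOTHETICAL registry of approved builds: agent id -> SHA-256 of the artifact
# that passed review. In practice this would live in a signed, audited store.
APPROVED_DIGESTS: Dict[str, str] = {}


def approve(agent_id: str, artifact: bytes) -> None:
    """Record the digest of the build that was reviewed and approved."""
    APPROVED_DIGESTS[agent_id] = hashlib.sha256(artifact).hexdigest()


def attest(agent_id: str, running_artifact: bytes) -> bool:
    """Re-measure the running artifact and compare it to the approved digest."""
    approved = APPROVED_DIGESTS.get(agent_id)
    if approved is None:
        return False  # never approved: fail closed
    measured = hashlib.sha256(running_artifact).hexdigest()
    return hmac.compare_digest(measured, approved)


def execute_payment(agent_id: str, running_artifact: bytes, amount_cents: int) -> str:
    # Attest before every sensitive action, not just once at onboarding,
    # so a silently updated model is caught at the moment it tries to act.
    if not attest(agent_id, running_artifact):
        raise PermissionError(f"{agent_id}: runtime attestation failed")
    return f"paid {amount_cents} cents"
```

Failing closed when no approved digest exists means an agent that was never reviewed cannot transact at all, and a build that drifted since approval is refused rather than trusted by default.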

4. Reassigning Liability

Existing standards and protocols (EMV, 3DS, ISO 20022) were designed for human clicks. When an agent mis-executes a payment, who bears responsibility: the merchant, the issuer, or the model? The future of financial accountability depends on programmable liability, where recourse and disputes are encoded as verifiable logic rather than settled by after-the-fact investigations.
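Liability encoded as verifiable logic can be illustrated by expressing allocation rules as ordered predicates over the facts of a dispute, so that any party can re-run them and reach the same outcome. The rules, party labels, and names here (`DisputeFacts`, `RULES`, `assign_liability`) are purely hypothetical and do not reflect any network's actual liability framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class DisputeFacts:
    mandate_valid: bool       # the mandate's signature verified
    within_mandate: bool      # the action matched merchant, asset, amount, time
    attestation_passed: bool  # the agent ran an approved build


# HYPOTHETICAL, illustrative allocation rules, evaluated in order.
# Each rule is (predicate, liable party, human-readable reason).
Rule = Tuple[Callable[[DisputeFacts], bool], str, str]

RULES: List[Rule] = [
    (lambda f: not f.mandate_valid, "merchant",
     "accepted a payment without a valid mandate"),
    (lambda f: not f.attestation_passed, "model provider",
     "agent ran an unapproved build"),
    (lambda f: not f.within_mandate, "model provider",
     "agent exceeded the signed mandate"),
]


def assign_liability(facts: DisputeFacts) -> Tuple[str, str]:
    """Return (liable party, reason) from the first rule that matches."""
    for predicate, party, reason in RULES:
        if predicate(facts):
            return party, reason
    return "user", "action was within a valid mandate on an approved agent"
```

Because the rules are data and evaluation is deterministic, the outcome of a dispute can be checked by anyone holding the same facts, instead of depending on a manual investigation.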

From Risk to Resilience

Agentic finance doesn’t just require automation—it requires accountability as code. The transition from human to agentic trust will be as transformative as the shift from paper to digital money. But without new layers of verification, liability, and integrity, the promise of agentic commerce could collapse under its own risk.

At t54, our mission is to prevent that collapse. By re-engineering the trust stack—from identity to accountability—we make agentic finance not just possible, but trustworthy.
